About aim42

aim 42 supports software evolution, maintenance, migration and improvement - in a systematic and pragmatic way.

|

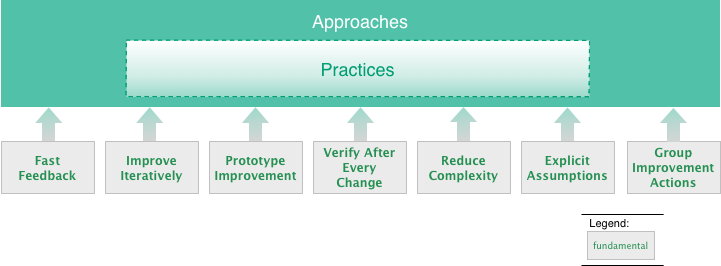

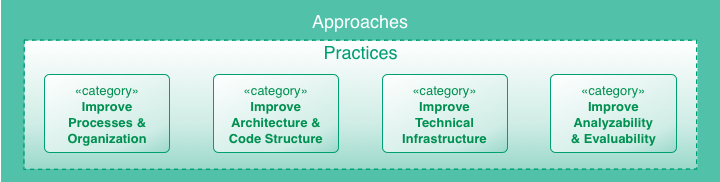

aim42 is a collection of practices and patterns to support software evolution, modernization, maintenance, migration and improvement of software systems:

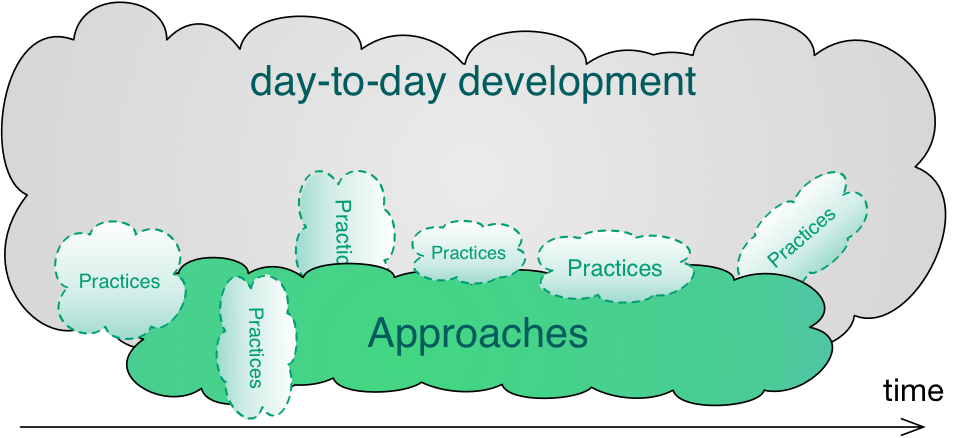

aim42 seamlessly integrates with your day-to-day development work. |

Authored by the aim42 community, lead by Dr. Gernot Starke <gernot.starke@innoq.com>

1. Introduction

1.1. Overview

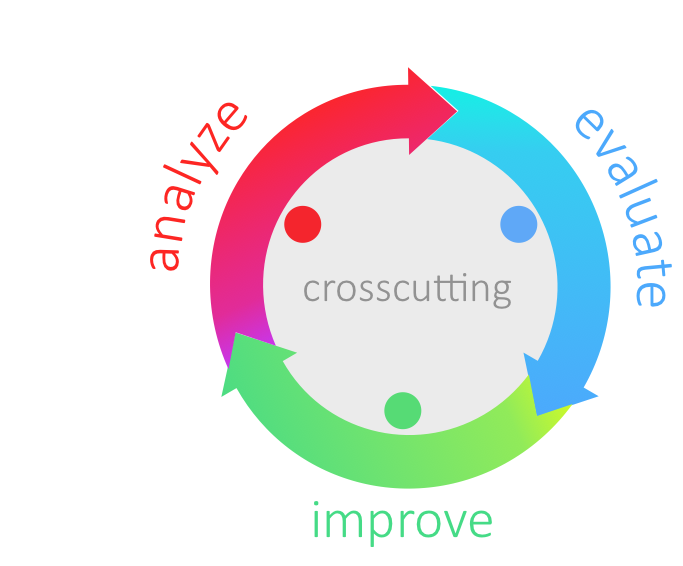

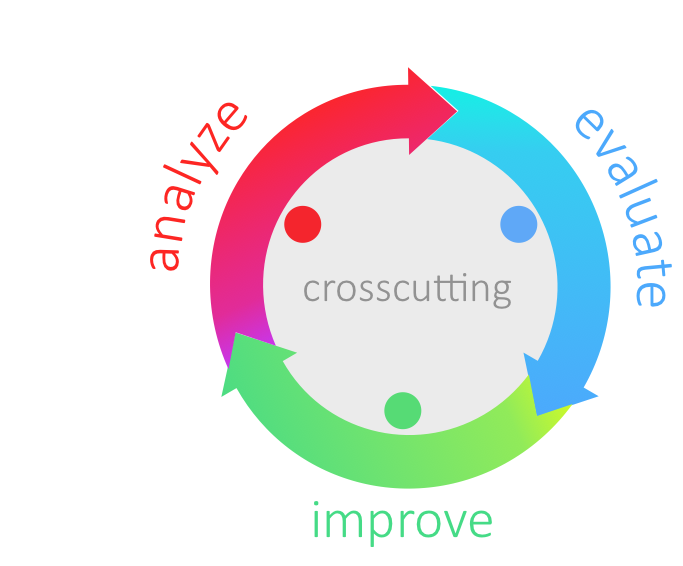

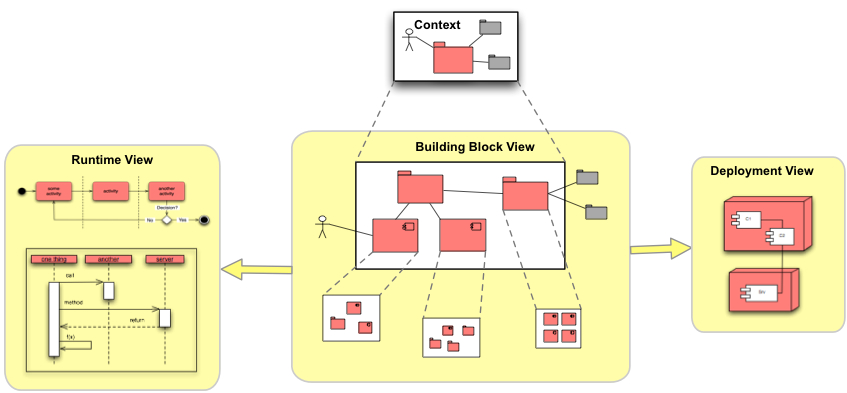

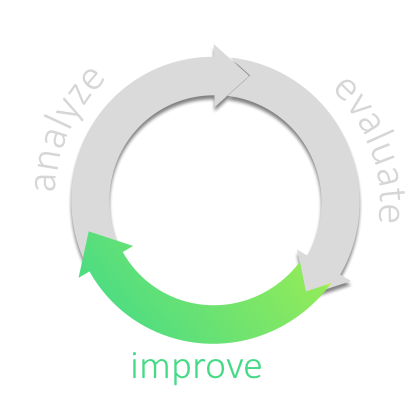

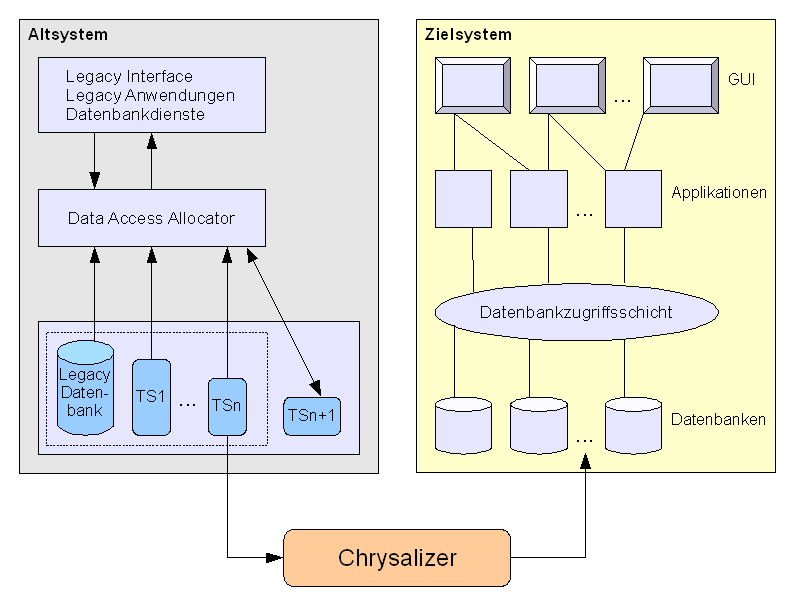

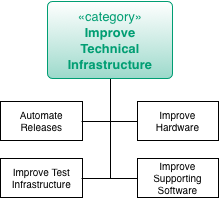

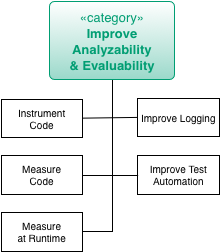

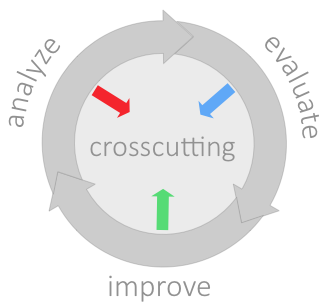

aim42 organizes software improvement in three major phases (Chapter 2, Analyze, Chapter 3, Evaluate and Chapter 4, Improve), build around some crosscutting activities.

Analyze |

Evaluate |

Improve |

Understand the system |

Estimate issue cost: How grave is this problem? |

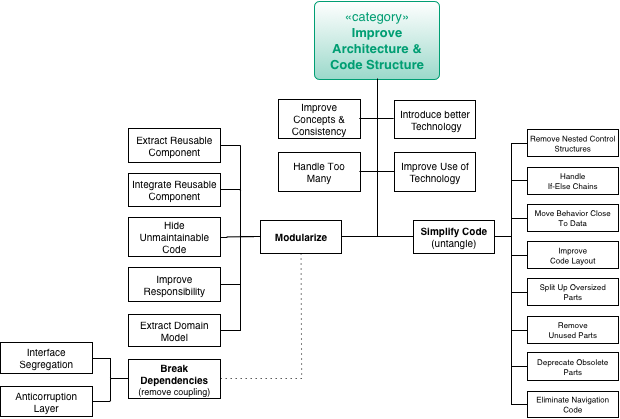

Improve architecture and code |

Find issues and risks |

Estimate improvement cost: How expensive is this change? |

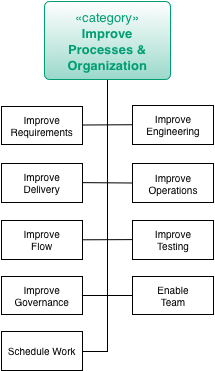

Improve processes |

Collect improvement options |

Usually "evaluation" means estimation |

Improve technology |

Interview stakeholders |

Estimate in intervalls |

Improve (technical) concepts |

Analyze context |

Evaluate tradeoffs |

|

Analyze architecture and code |

||

Crosscutting |

||

Manage issues (risks, problems, symptoms, root-causes) |

||

Manage improvements |

||

Manage the (m:n) relationships between issues and improvements |

||

Plan improvements, interleaved with to day-to-day activities |

||

Verify improvements (check if improvements resolved appropriate issues) |

||

1.2. Why is software being changed?

Software systems, at least most of those that are practically used, are changed all the time. Features are added, modified or removed, user interaction is streamlined, performance is tuned, changes to external interfaces or systems are reflected. The reasons for changing a system can be grouped into four categories (see [ISO-14764]):

-

Corrective changes

-

fixing failures within the software system

-

-

Adaptive changes

-

data structures we rely on have been changed

-

external interfaces have been changed - our system has to cope with these changes

-

some technology, framework or product used within the system is not available any longer and needs to be replaced

-

-

Perfective changes

-

operational costs have to be reduced

-

maintenance costs have to be reduced

-

existing documentation does not reflect the truth (any more)

-

resource consumption needs to be optimized

-

system needs to work faster

-

system needs to become more reliable or fault-tolerant

-

people need new features

-

system needs to be integrated with new neighbour

-

system needs to comply to new regulations or laws

-

system needs new or improved user interface

-

existing features have to be modified or removed

-

-

Preventive changes

-

technical debt has to be reduced

-

You see - lots of good reasons :-)

1.3. Why does software need improvement?

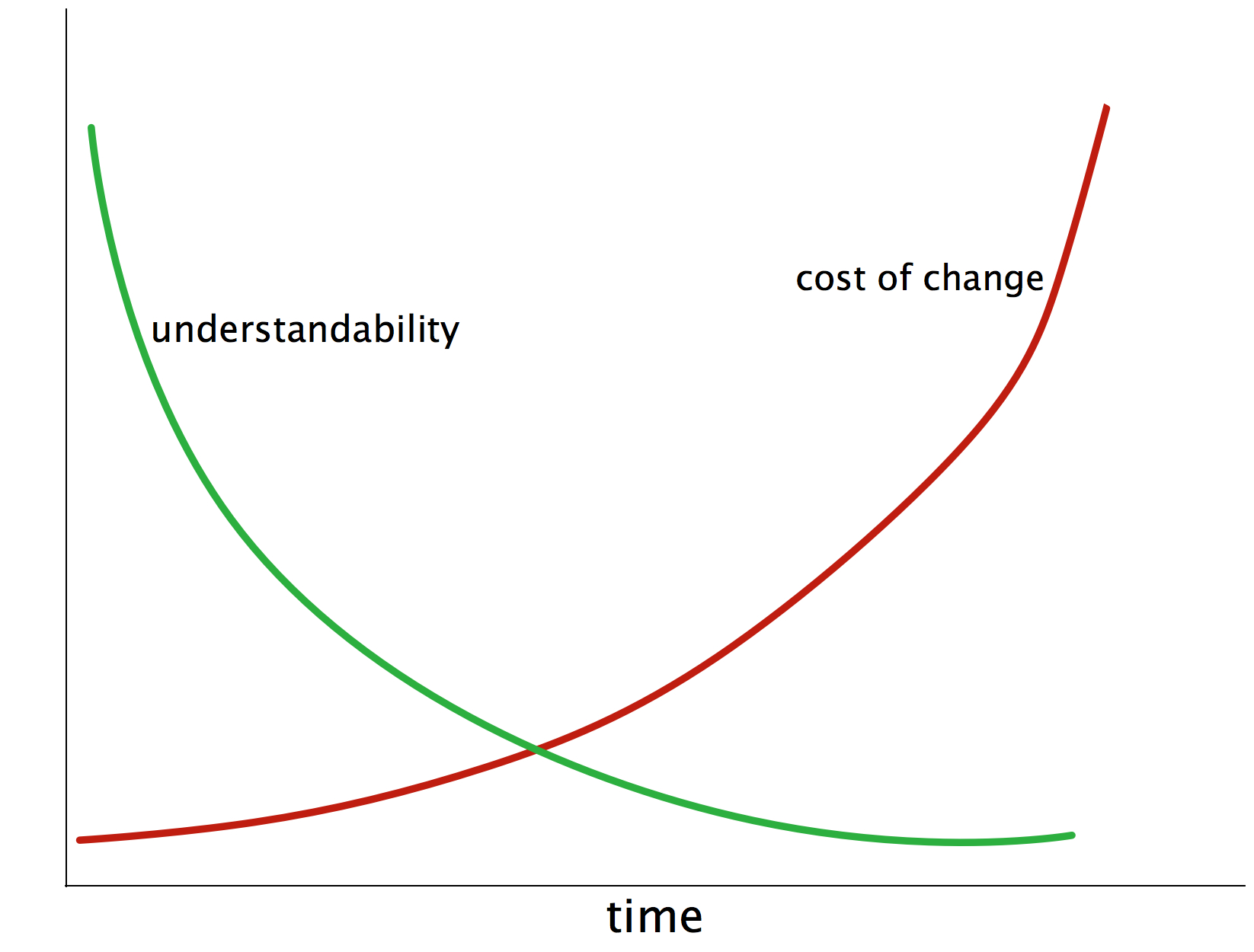

The most important reason is depicted in the following diagram: The cost-of-change of most software increases heavily over time… making those people really unhappy that have to pay for these changes (called maintenance, evolution, new-features or else).

An additional effect of long-term maintenance of software is the strong decrease in understandability: When a system matures it becomes more and more difficult to understand its inner workings, changes become increasingly risky and consequences of changes become difficult to foresee which can lead to quite blurry effort estimations.

These negative effects share a few common root causes:

-

lack of conceptual integrity

-

internal disorder

-

overly complex internal structure, either of source code or data

-

overly complex concepts (cross-cutting solutions for fine-grained problems)

-

overly complex or inappropriate internal processes

-

inappropriate selection of technology (frameworks, libraries or languages)

-

(you surely can find a few more…)

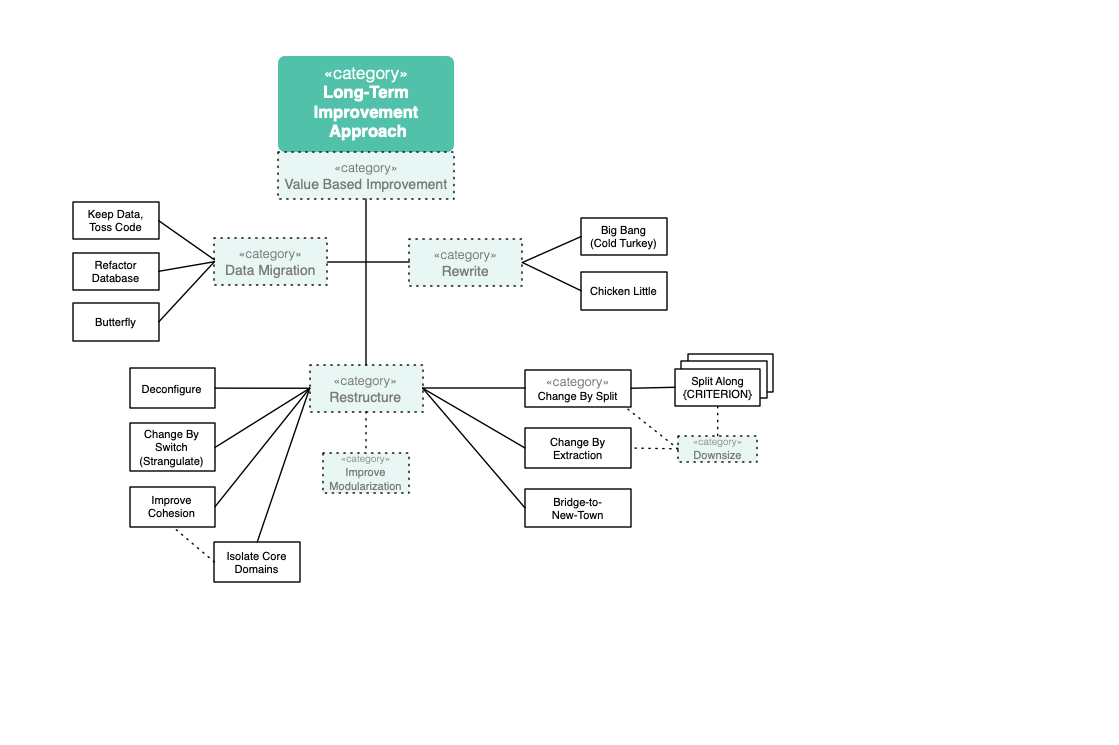

1.3.1. Long-term Goal

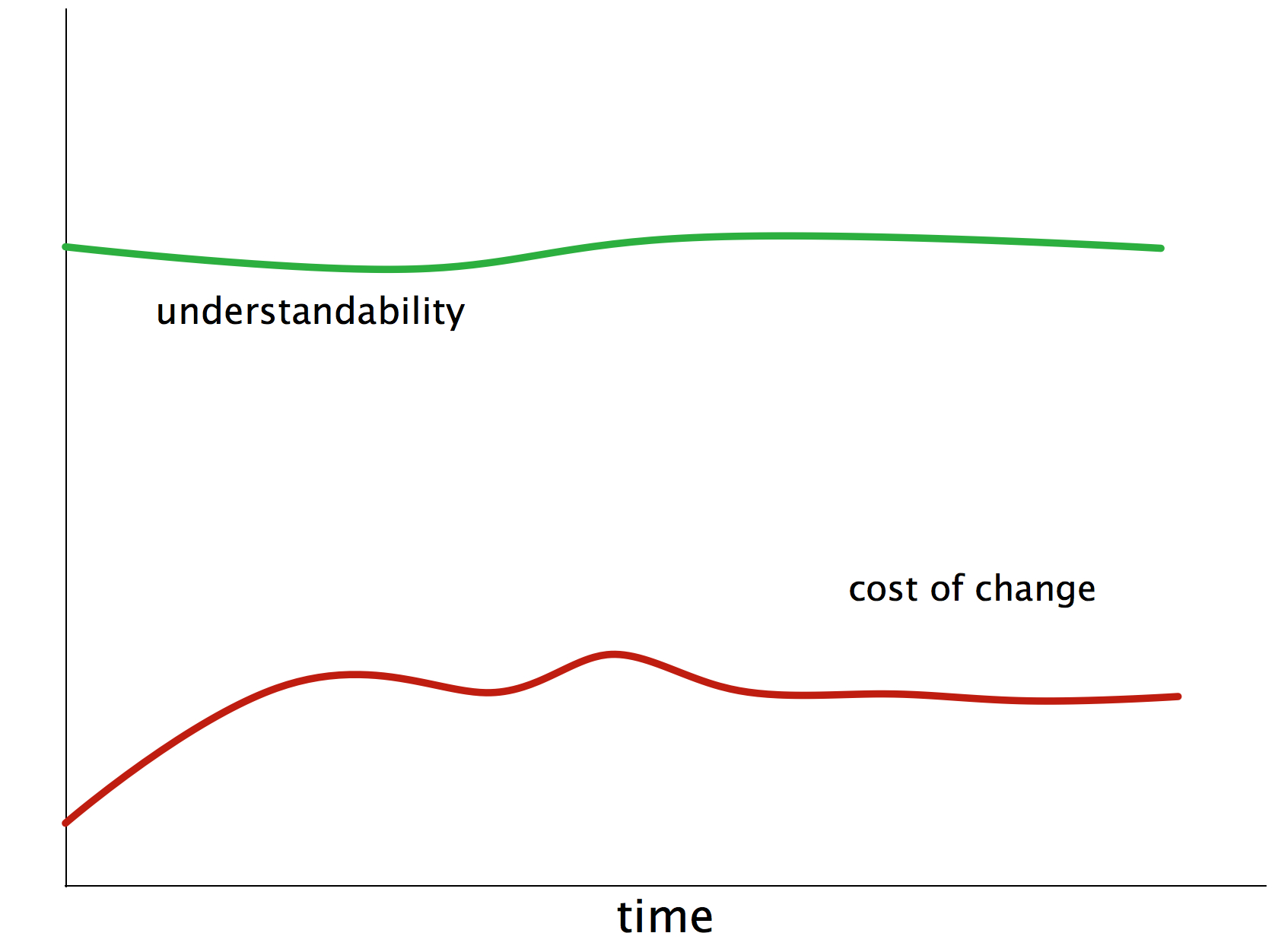

In the beginning, though, everything was fine: nice coupling and cohesion, appropriate technologies, well written code, understandable structures and concepts (see figure Figure 3, “Goal: Maintainable Software”)

But as more and more changes, modifications, tweaks and supposed optimizations were performed under growing time and budget pressure, things got nasty. The maintainers piled up so called technical debt (we software folks call it quick-hacks, quick-and-dirty-fixes, detours or abbreviations). We’re quite sure you know what we’re talking about - we experienced it over and over again, it seems to be the normal situation, not the (bad) exception.

Investment in methodical and systematic software architecture improvement will have the following effect.

1.4. How does aim42 work?

1.4.1. Three Simple Phases

aim42 works in a phased iterative manner:

-

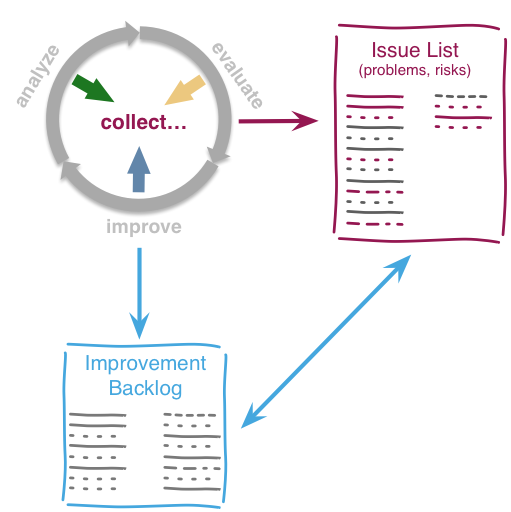

Chapter 2, Analyze: collect issues: problems, risks, deficiencies and technical debt within your system and your development process. Focus on problems in this phase, not on potential solution approaches. In addition, develop (and document) an understanding of internal structures, concepts and architectural approaches.

-

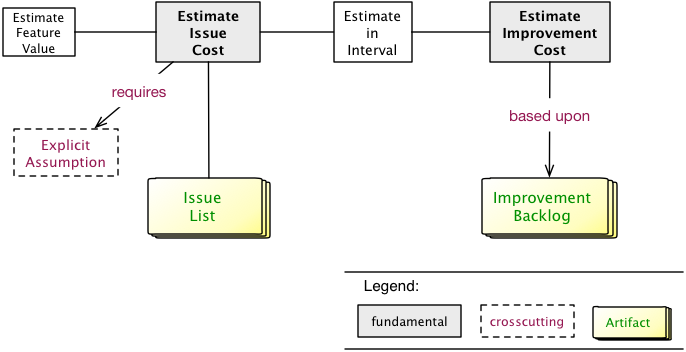

Chapter 3, Evaluate: determine the "value" of issues and their solutions (improvements)

-

Chapter 4, Improve: systematically improve code and structures, reduce technical debt, remove waste and optimize.

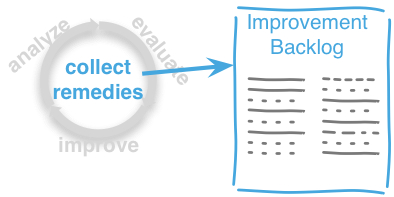

These three phases are performed iteratively - as explained below. Several cross-cutting practices and patterns should be applied in all phases, for example documenting results, Section 5.6, “Collect Opportunities for Improvement” or long- and short-term planning activities.

1.4.2. Common Terminology

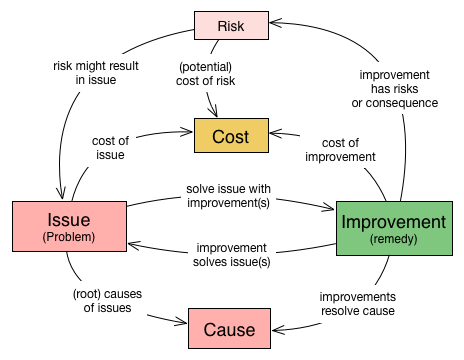

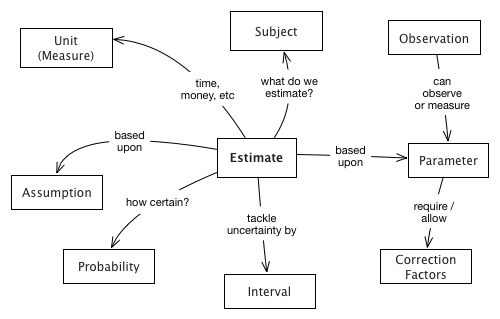

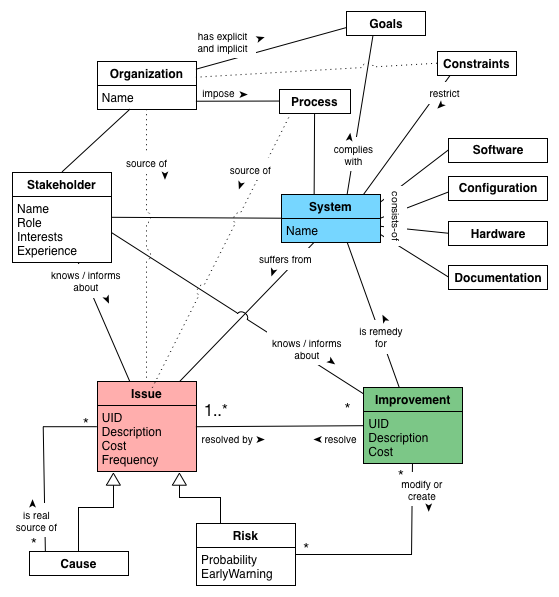

aim42 relies on a common terminology, a small set of fundamental concepts.

Issue |

Any problem, error, fault, risk, suboptimal situation or their causes within the [System] or processes related to it (e.g. management, operational, development, administrative or organizational activities). |

Cause |

Fundamental reason for one or several issues. |

Improvement |

Solution, remedy or cure for one or several issues. |

Cost (of issue) |

The cost (in any unit appropriate for business, e.g. money, effort or such) of the issue, related to a frequency or period of time. For example – cost of every occurrence of the issue or recurring cost per week. |

Cost (of improvement) |

The cost (in monetary units) of the improvement, remedy, tactic or strategy. |

Risk |

Potential problem. Improvements can change associated risks for the better or the worse, even create new risks. |

See also the more detailed Appendix A, Domain Model (not required for the casual reader)

1.4.3. Iterative Approach

In compliance with modern agile development methodologies, aim42 fundamentally depends on iteration and feedback between the phases.

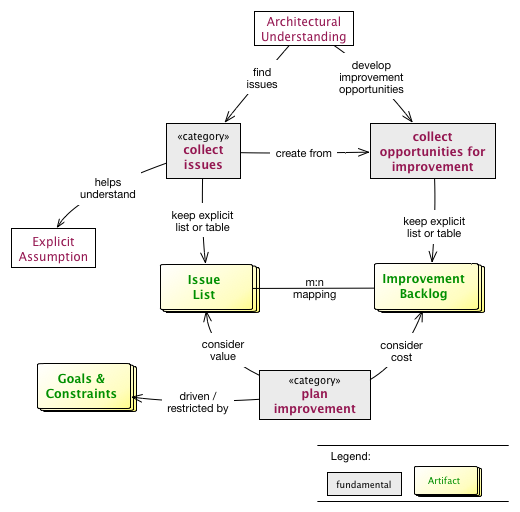

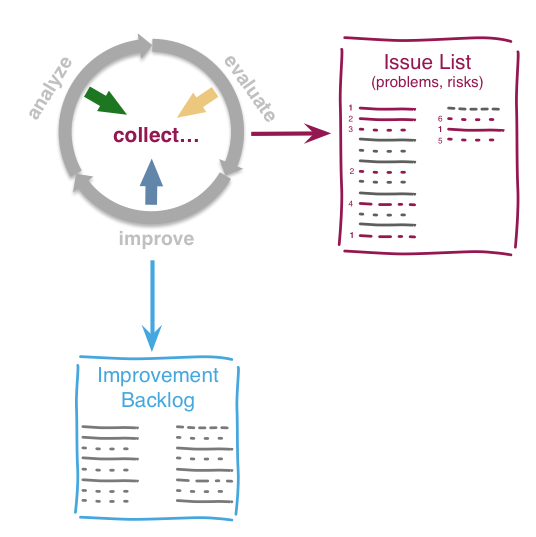

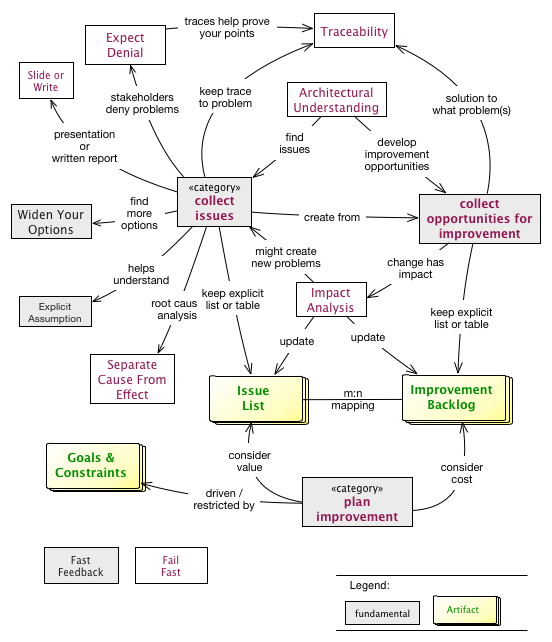

Within each phase, you collect both issues and opportunities for improvement, as depicted in the illustration below:

Issues and improvements need to be

-

related to each other: No idea of improvement without an existing issue - as we do not want to optimize "because we can".

-

evaluated in some business-compatible unit (e. g. Euro, $) as described above. See Chapter 3, Evaluate.

1.5. Patterns and Practices Provide No Guarantee

We are very sure that aim42 can work for your system or your organization. But (yes, there’s always a but) we cannot guarantee: Maybe your software is so extraordinary, so very special, that it needs other treatments.

Maybe your organization does not fit our prerequisites or is way more advanced than we anticipated in our approach…

You have to use all practices, patterns and approaches of aim42 at your own risk and responsibility. We (the aim42 contributor team) can by no means be held responsible for any results of applying aim42.

2. Analyze

2.1. Goals

-

Obtain overview of intent, purpose and quality requirements of the system ([System]).

-

Develop and document an understanding of internal structures, concepts and architectural approaches.

-

Find all problems, issues, symptoms, risks or technical debt within the system ([System]), its operation, maintenance or otherwise related processes.

-

Understand root causes of the problems found, potential interdependencies between issues.

2.2. How it works

Look systematically for such issues at various places and with support of various people.

| To effectively find issues, you need an appropriate amount of understanding of the system under design ([System]), its technical concepts, code structure, inner workings, major external interfaces and its development process. |

One serious risk in this phase is a premature restriction to certain artifacts or aspects of the system: If you search with a microscope, you’re likely to miss several aspects.

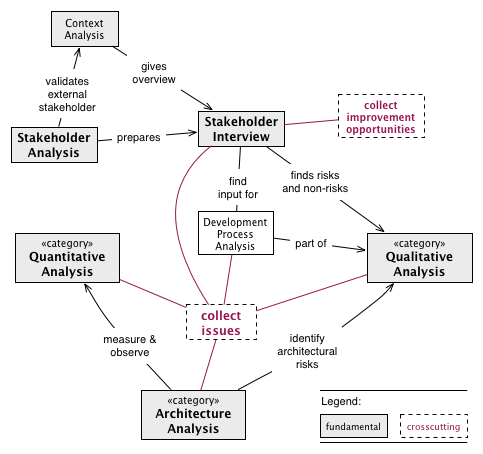

Figure: Overview of Most Important Analysis Practices

Always begin with Section 2.3.23, “Stakeholder Analysis”, then conduct Section 2.3.24, “Stakeholder Interview” with important stakeholders.

Improve your Section 5.5, “Architectural-Understanding” of the [System] by

-

Section 2.3.7, “Documentation-Analysis”, read especially the architecture documentation, focus on Section 2.3.28, “View Based Understanding”.

-

Perform Section 2.3.25, “Static Code Analysis” to learn about code structure in-the-large. This also helps to identify risky code.

-

Section 2.3.2, “Capture Quality Requirements” from the authoritative stakeholders of the systems.

-

Conduct a Section 2.3.14, “Qualitative Analysis” of the system, its architecture and associated organization, based upon the specific quality requirements

-

-

Inspect and analyze all involved organizational processes - (development, project management, operations, requirements analysis)

-

Perform Section 2.3.21, “Runtime-Analysis” or Section 2.3.15, “Quantitative-Analysis”, e.g. performance and load monitoring, process and thread analysis

-

-

Inspect the data created, modified and queried by the system for structure, size, volume or specialities

Finally, conduct a Section 2.3.20, “Root Cause Analysis” for the discovered major issues in close collaboration with the appropriate stakeholders.

| Never start solving problems until you have a thorough understanding of the current stakeholder requirements. Otherwise you’ll risk wasting effort in areas which no influential stakeholder cares about. |

2.3. Patterns and Practices for Analysis

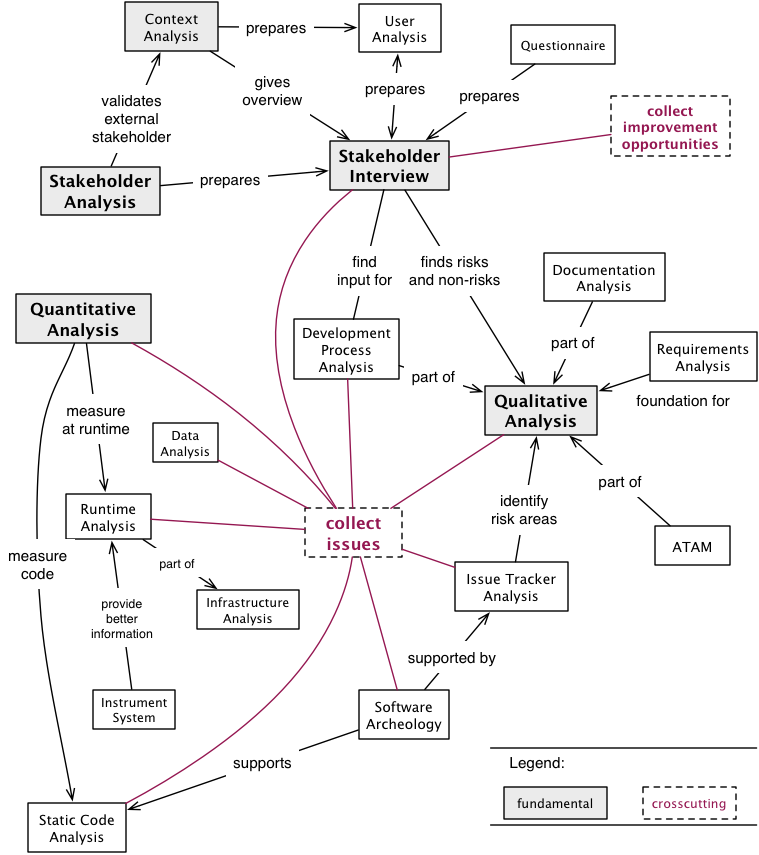

Figure: Detailed overview of Analysis Practices and Patterns

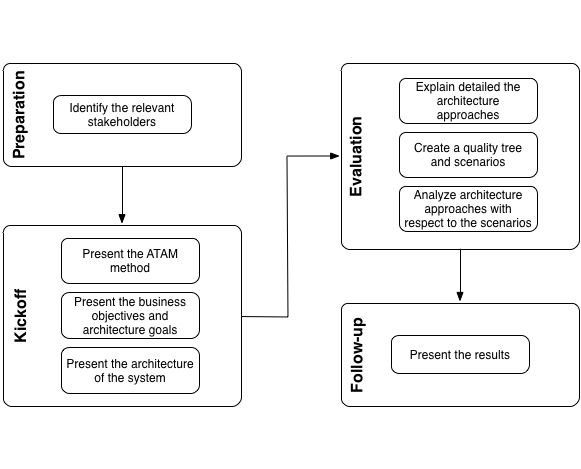

2.3.1. Atam

Architecture Tradeoff Analysis Method. Systematic approach to find architectural risks, tradeoffs and sensitivity points.

Intent

Apply the ATAM method to evaluate the software architecture regarding the compliance with quality goals.

Description

The ATAM method consists of four phases as shown in diagram "Approach of ATAM".

The phases are:

-

Preparation:

-

Identify the relevant stakeholders: The specific goals of the relevant stakeholders define the primary goals of the architecture. Who belongs to these relevant stakeholders has to be determined by a Section 2.3.23, “Stakeholder Analysis”.

-

-

Kickoff:

-

Present the ATAM method: Convince the relevant stakeholders of the significance of comprehensible and specific architecture and quality goals. ATAM helps identify risks, non-risks, tradeoffs and sensitivity points. Calculation of quantitative attributes is not subject of this method.

-

Present the business objectives and architecture goals: Present the business context to the relevant stakeholders, especially the business motivation and reasons for the development of the system. Clarify specific requirements that the architecture should meet, for instance flexibility, modifiability and performance.

-

Present the architecture of the system: The architect presents the architecture of the system. This includes:

-

All other systems with interactions to the [System],

-

building blocks of the top abstraction level,

-

runtime views of some important use cases,

-

change or modification scenarios.

-

-

-

Evaluation:

-

Explain in detail the architecture approaches: The following questions are answered by the architect or developers:

-

How are the relevant quality requirements achieved within the architecture or the implementation?

-

What are the structures and concepts solving the relevant problems or challenges?

-

What are the important design decisions of the architecture?

-

-

Create a quality tree and scenarios: In the context of a creative brainstorming the stakeholders develop the relevant required quality goals. These are arranged in a quality tree. Afterward the quality requirements and architecture goals of the system are refined by scenarios which are added to the quality tree. The found scenarios are prioritized regarding to their business value.

-

Analyze the architecture approaches with respect to the scenarios: Based on the priorities of the scenarios the evaluation team examines together with the architect or developers how the architecture approaches support the considered scenario. The findings of the analysis are:

-

Existing risks concerning the attainment of the architecture goals.

-

Non-risks which means that the quality requirements are achieved.

-

Tradeoff points which are decisions that affect the quality attributes positive and other negative.

-

Sensitivity points which are elements of the architecture that have formative influence to the quality attributes.

-

-

-

Follow-up:

-

Present the results: Creation of a report with:

-

Architectural approaches

-

Quality tree with prioritized scenarios

-

Risks

-

Non-risks

-

Tradeoffs

-

Sensitivity points

-

-

Experiences

The ATAM method:

-

provides operational, specific quality requirements,

-

discloses important architectural decisions of the [System],

-

promotes the communication between relevant stakeholders.

| The ATAM method does not develop concrete measures, strategies or tactics against the found risks. |

ATAM has been successfully applied by many organizations to a variety of systems. It is widely regarded as the most important systematic approach to qualitative system/architecture analysis [1].

2.3.2. Capture Quality Requirements

Description

Invite authoritative stakeholders to a joint workshop (e.g. half- or full-day). Let them write quality scenarios to describe their specific quality requirements for the system. Moderate this workshop.

-

Use scenarios to formulate specific quality requirements.

-

Order those scenarios within a mostly hierarchical quality tree, similar to [ISO-9126].

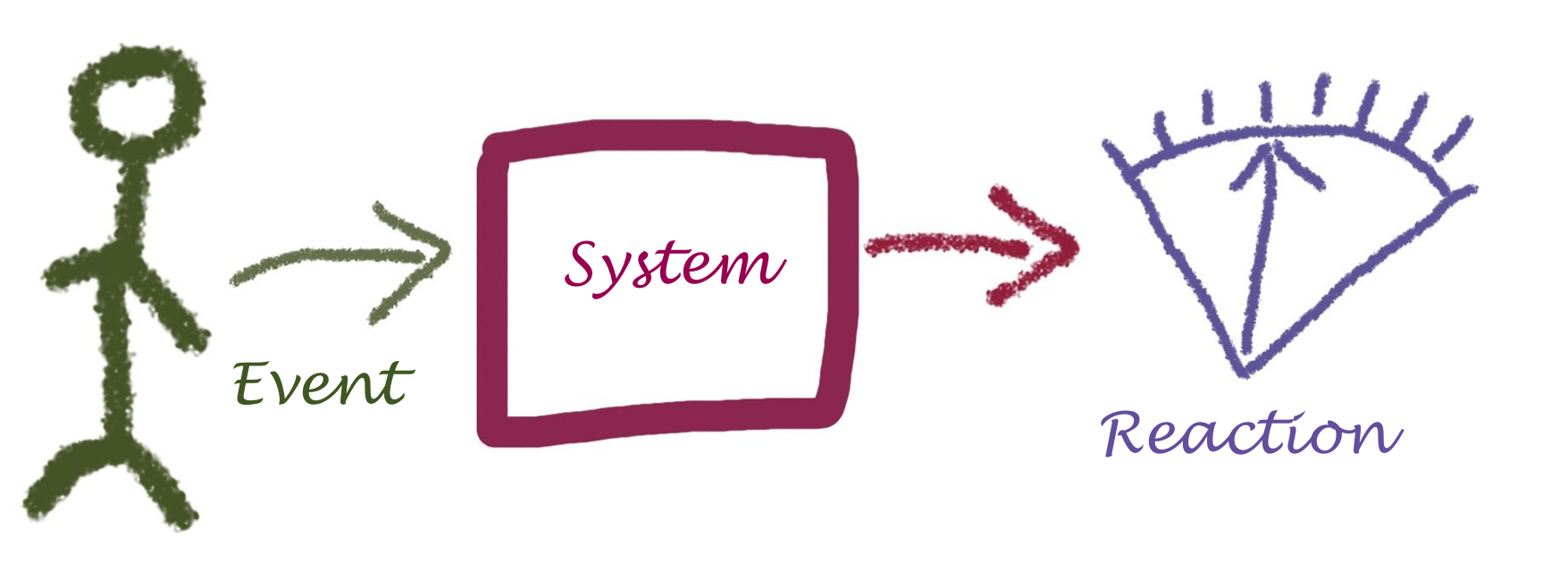

Scenario-based Quality Description

- Scenario

-

describes the reaction of a system to a certain event (or type of event).

Although this definition is concise, it needs some explanation to become understandable. See figure Figure 9, “Structure of Quality Scenarios”:

-

An event can be

-

a user clicking a button

-

an administrator starting or stopping the system

-

a hacker trying to get unauthorized access.

-

-

An event can also be

-

a manager needing another feature

-

another manager wanting to reduce operation costs

-

some government agency requiring financial data to be tamper-proof

-

Context |

The individual processing step AB within use case XY is declared invalid by the regulatory authority and removed from the system. The data processed by the system is not affected. |

Business Goal(s) |

The needed changes to the use case XY can be carried out at low cost and without negative effects. |

Trigger |

The legislator, represented by the regulatory authority, prohibits the use of the AB processing step. |

Reaction |

A developer or architect removes the AB processing step in the system (by deleting the corresponding calls or reconfiguring the process flows). |

Target value |

The change requires a maximum of 24 hours with a maximum of 48 person-hours of effort. After this time, the system is fully working again. |

Constraints |

This change has no effect on the existing data of the users/customers in the system regarding the XY application case. An (automatic) migration of some data is permitted, but must not exceed the 24-hour limit. |

Such a scenario makes it clear to everyone that not only business functionality is needed to achieve the project’s goals. It makes technical requirements (in the example above: modifiability) visible to non-technical stakeholders by providing traceability from the business goal to the technical details.

Experiences

-

Needs moderation: Brainstorming quality requirements usually works well in moderated workshops. If given (even trivial) examples, every stakeholder will most likely write down several scenarios. We often received 80-120 different scenarios in one-day workshops.

-

Uncovers problems and risks: Scenarios collected within brainstorming sessions often contain hidden problem descriptions, risks or problems with the current systems.

-

Covers organization and process: Scenarios sometimes cover process or organizational aspects (like release cycles should be faster than they are now). Move those to your Section 5.14, “Improvement Backlog”.

-

Improves human communication: Different stakeholders often start communicating about the system or their requirements during such workshops. This kind of interaction should have happened long before…

Applicability

Use this practice when (authoritative) stakeholders are available for discussion or a workshop.

If you have well-documented, specific and current (!) quality requirements available, you might consider skipping this practice for now (although we’re quite sure it’s a good opportunity to learn a lot about the system, its stakeholders, their requirements and opinions).

Also Known As

-

Non-functional requirements (although this term is misleading, as functional requirements strongly influence the quality of any system!)

-

Documentation of quality requirements.

References

The workshop-based collection of quality requirements has been described by [Clements-ATAM].

-

Real-world examples of software quality requirements (currently available only in German): https://github.com/arc42/quality-requirements

-

[Clements-ATAM] contains a brief description of scenario-based quality description and details on how to set up such workshops.

2.3.3. Context-Analysis

Intent

-

Analyse external interfaces for risk, technology, business value and other factors.

-

Use the context to gain overview of the System within its business or technical environment.

-

Identify risks or problems in the immediate vicinity of the System.

Terminology

We distinguish the following terms in context analysis:

- Business Context

-

Adjacent organizations, applications, users or interfaces either requiring or providing services or data from or to the System. The business context can be used to describe the overall business process(es) the System is involved in.

- Technical Context

-

Adjacent hardware or technical infrastructure, either required by the System or providing data or events to it. When the System can be used or operated in different hardware infrastructures, there might exist several different technical contexts.

Description

Context analysis shall help identify issues associated with external interfaces, e.g. interfaces that:

-

influence critical quality requirements of the system (e.g. reliability, security, throughput, runtime performance, operation cost)

-

are overly complex

-

are brittle or fragile

-

are implemented with unsuitable technology

-

are underdocumented or poorly understood

-

transport critical data (important, sensitive, insecure)

-

transport especially huge amounts of data

-

have high operational effort

-

have high usage cost (e.g. cost-per-request or similar)

-

have high cost-of-change or high maintenance costs

-

are difficult or impossible to modify/enhance/change

-

suffer from operational failures or production issues

Note that user interfaces often belong to the context, especially with respect to the kind of data or events exchanged with users or user groups. Due to the importance of this topic, aim42 devotes an own section to it.

Example

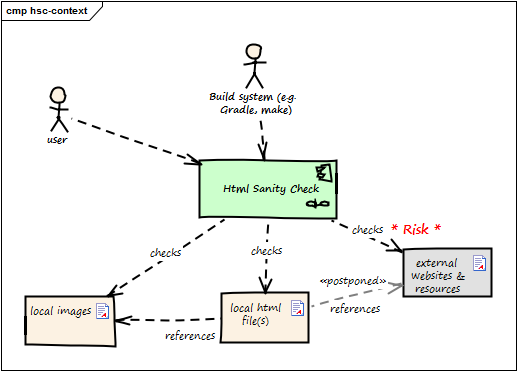

In the context diagram example of fig. Figure 10, “Example of Context View” you see some user roles and some external systems. The context diagram is accompanied by a tabular description of the elements and/or relationships. Example taken from the HtmlSanityCheck (HtmlSC) open source project.

| Neighbor | Description |

|---|---|

user |

documents software with toolchain that generates HTML. Wants to ensure that links within this HTML are valid. |

build system |

|

local HTML files |

HtmlSC reads and parses local HTML files and performs sanity checks within those. |

local image files |

HtmlSC checks if linked images exist as (local) files. |

external web resources |

HtmlSC can be configured to optionally check for the existence of external web resources. Due to the nature of web systems, this check might need a significant amount of time and might yield invalid results due to network and latency issues. |

In this example, the complete check has to be completed within 5 seconds (a quality requirement). As access to external websites or resources might be hindered by network latency or even failures, the external interface responsible for this requirement will likely contain risks.

2.3.4. Data-Analysis

Intent

Analyze and inspect the data created and manipulated by the system for its content, structure, quantity and size.

Description

In data analysis you could examine the following topics:

-

Data Actuality and Correctness (which covers integrity issues as well)

-

Analyze Data Distribution, Replication and Syncing

Analyze Data Structures

Are data structures suited to represent the problem domain?

At first, make the structure of the existing data explicit, e.g. by creating a rough sketch of a data model as either informal diagrams, entity-relationship or class diagrams. Focus should be on overview: Where and how are which kinds of data stored in which format. What are the relationships between the data elements?

Second, create an explicit model of the required domain data structures.

Some typical questions might help in finding problems:

-

structural differences between those two models?

-

differences in data types?

-

differences in plausibility or validity checking?

Analyze Data Access

Get an overview of data access paths: How is data read or written? Do the queries match their requirements, or are complex mappings or unsuitable indirections involved?

-

What queries or executed how often?

-

How large are the results in number or volume?

-

Do relationships between query results have to be computed or do appropriate indices exist?

Analyze Data Size

-

Are some parts of the data especially large?

-

Is the relation between record-size (how large is a single record?) and record-volume (how many records exists?) plausible?

-

Do critical queries involve especially large parts of data?

Analyze Data Validation

-

How is data validated? (upon write, upon read, on client, on server, redundantly, uniformly)

-

Is validation consistent with current business rules?

-

Is validation overly complex?

-

Is validation implemented with appropriate technical means?

Analyze Data Actuality and Correctness

Especially in data concerning dynamic entities like people, organizations, markets, commodities etc., facts are very likely to change over time. Such data (stored facts) might become invalid sooner or later. Other types of information (like tax records, invoices or bookings on bank accounts) are created once and remain valid forever).

|

-

Which parts of the data are subject to (what kind of) changes?

-

Are parts of the data known to be invalid or contain invalid portions?

-

Does the System handle potentially wrong or invalid data appropriately?

-

Are there (organizational or technical) processes in place that deal with data inconsistencies or faults?

Analyze Data Access Protection

-

Is there an overview what kinds of data need which level of (access) protection?

-

Is there a security concept in place covering protection against unauthorized access?

-

How are users/roles/organizations allowed to access data managed?

-

Is there a process in place to revoke access for outdated users/roles/organizations?

-

-

Is there a plan what shall happen in case of security breaches or data theft?

-

How is data theft recognized?

2.3.5. Debugging

Intent

Identify the source of an error (bug) or misbehavior by observing the flow of execution of a program in detail.

| Many software developers we met violated the basic rules of debugging. They worked in haste, took wrong assumptions, imagined-instead-of-read or simply hunted bugs at the wrong parts of the system. |

Description

Debuggers are well-known and important tools for most software developers. Yet finding bugs is often more difficult than expected - despite powerful tool support.

Approach the search for bugs, errors in the following order:

-

Get a clear and precise description of the error, the detailed wording of all error messages, log messages, stacktraces or similar information.

-

Know the context of the error: the exact version of the system, the operating system, involved middleware, hardware settings and so on. Have knowledge of the input data which leads to the error.

-

Minimize external disturbance while searching for errors, you need to concentrate and observe details. Shut off chat and twitter clients, notifications and your phone. Send away your boss to an important management mission and lean back to reflect the error. Perhaps a colleague can support you.

-

Reproduce the error in the live system. Don’t rely on the assumption you can reproduce it - make sure you can reliably really reproduce it.

-

Obtain the exact version of all required source code and all involved data.

-

Reproduce the error in development environment: This ensures your development environment is consistent to the live system.

-

Rephrase your error assumption into a question: Distinguish symptoms from the cause of the error by asking "why?" a few times.

-

Identify the building blocks which are involved in the error path.

-

Understand the error scenario: You need to know the business or technical scenario (aka the process or activity flow) of the error: Which steps within the system or its external interfaces precede the error? This step is an example of Section 2.3.28, “View Based Understanding”.

-

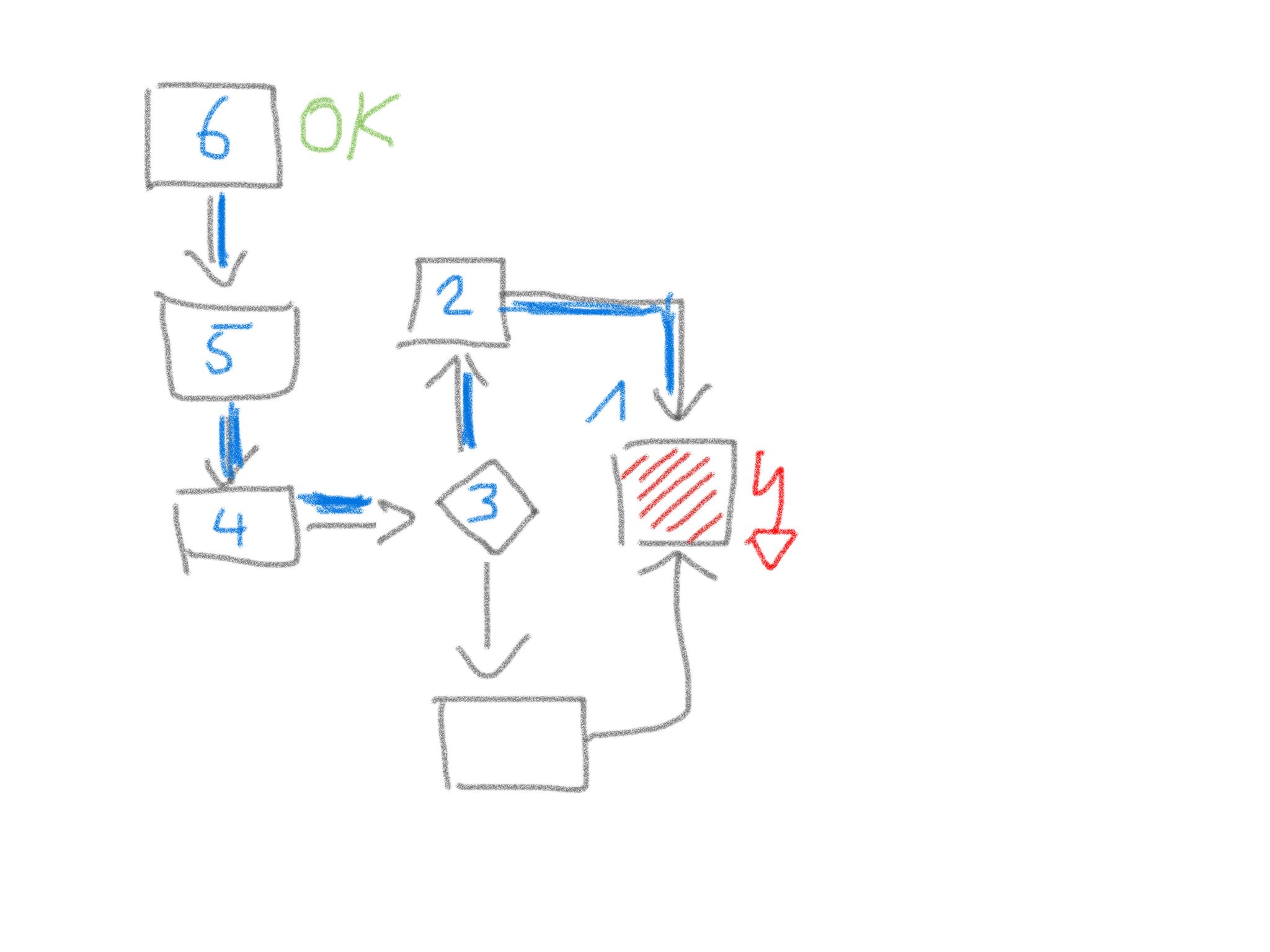

Make this scenario explicit - draw or scribble a diagram. See the diagram "Divide and conquer" below as an example. Here the error arises in building block 1. You suppose the processing within the system is spanned by the blue marked data path in which the building blocks 2 to 6 are involved. Cut the path in half and check your assumption at the transition of one half to the other (here between building block 4 and 3). If no error is observable here then the error occurs after the considered transition. Otherwise you have to look for the error before the transition.

Figure 11. Divide and conquer debugging tactics

Figure 11. Divide and conquer debugging tactics -

Plan your debugging strategy: Think of the expected outcome of every part of your scenario.

-

If you know you’re traveling to Pisa (Italy), you won’t confuse the Leaning Tower with an error.

-

-

Look, don’t imagine: Sherlock Holmes, the successful detective has formulated the golden rule of debugging: "It’s a capital mistake to theorize before one has data". Instrument the system or use step debugging. Look exactly what the messages are, read carefully.

-

Change only one thing at a time and test if the error disappears: Aim for errors with a sniper rifle, not with a shotgun.

-

Apply the 4-Eye-Principle: Describe the problem and your state of debugging to somebody else. Especially clarify all your assumptions.

Experiences

If locating errors takes very long, you’re probably facing one of the following issues:

-

You suffer from any assumption that’s currently not valid.

-

You think something instead of observing it - you let your mind deceive your eyes or ears.

-

You ignore the context: you test a wrong version, with wrong data or a wrong operating system.

Applicability

-

Whenever a bug or misbehavior of a program is reported, debugging can help to identify its root cause.

-

Debugging can help to understand a system by making its inner working explicit.

2.3.6. Development-Process-Analysis

2.3.7. Documentation-Analysis

2.3.8. Infrastructure-Analysis

Intent

Analyze the technical infrastructure of the [System], e.g. with respect to time and resource consumption or creation. Part of Section 2.3.21, “Runtime-Analysis”.

Description

Infrastructure analysis is associated to the more general Section 2.3.21, “Runtime-Analysis”, with focus on technical infrastructure for operation, test and development of the [System].

Inspect and analyse the technical infrastructure, for example the following aspects:

-

production hardware: does characteristics, type and size of the hardware suit the system and the business problem? Hardware might consist of several subsystems, like processing, various levels of storage (processor cache, RAM, flash, disk, tape or others), graphical and network interfaces and arbitrary specialized hardware

-

development and test hardware

-

software infrastructure, like operating system, required database, middleware, frameworks and libraries

It helps to measure runtime behavior agains expected or required values, for example processing time and memory consumption. Section 2.3.10, “Instrument System” can support this type of analysis.

Specialized stakeholders (like datacenter administrators, operating-system or database experts, hardware designers) can often pinpoint critical aspects of existing infrastructures from their experience.

Apply Section 2.3.28, “View Based Understanding”, especially an infrastructure overview (e.g. deployment diagram) to get an overview of existing hardware plus the associated software. Start with an hardware context and refine. Ensure you have at least all hardware-types (node-types) plus their relations (networks, buses) visible. Double-check this overview with the appropriate stakeholders.

2.3.9. Hierarchical-Quality-Model

Intent

Decompose the overall goal of "high quality" into more detailed and precise requirements, finally resulting in a tree-like structure. See Section 2.3.1, “Atam” and [Quality-Requirements].

2.3.10. Instrument System

Use retroactive modification of the executables which target cross-cutting concerns to make the existing software-base tell about it’s internals. Ways to achieve this can include aspect-oriented programming (AOP), Monkey-Patching and other metaprogramming techniques.

Intent

Find out how the system is really used and what the runtime relationships are, as well as other facts that can not be easily determined by Section 2.3.25, “Static Code Analysis” even in situation where the system under design is largely undocumented and the architecture work thus mostly relies on assumptions, interviews and lore.

Description

In many languages today it is possible to define operation that alter the behavior of certain structures in the system without modifying the original source code. In Java this is often done by byte code instrumentation, in Ruby, PHP and some other languages there are built in mechanisms to (re-) define the behavior of system classes or libraries.

In theory instrumenting the system therefore is a straightforward process:

-

Identify and describe the concern that shall be explored (e.g. object creation, function entries and exits, code execution or something else that can be described in the terms of the mechanism used).

-

Write the code that collects the data about the system behavior (e.g. sends it to a syslog, writes it to a file, sends it to a dedicated server, creates an SNMP Trap etc.)

-

Use the (language specific) mechanism to create the instrumented version of the system

-

Replace (part of) the currently operational system with the instrumented version

-

Collect the data

-

Replace the instrumented version with the previously operational version

In real life, since the mechanisms of instrumentation varies widely, specific ways must be found for each scenario.

While tools like AspectJ provide easy ways to instrument Java code and Ruby’s metaprogramming model allows for easy introduction of cross-cutting functionality the same gets harder to do with other languages. In some languages it may be necessary to modify a dynamically linked library with central operations to introduce instrumentation without modifying the original system.

|

A special form of this pattern, especially useful for interpreted languages, is instrumenting the source code manually. Basically all you do here is manually type in the code to collect the information you’re interested in. In this case it is especially important to have a tried and tested way to replace the instrumented system back with the original system! |

Experiences

| even if used cautiously, the instrumentation of the system under design can entail heavy performance penalties (on execution time, space used, bandwith etc.) so always make sure that there is a quick way to switch back to the original non-instrumented version. |

2.3.12. Organizational-Analysis

Description

Work-in-progress: collecting ideas and currently doing research, therefore still chaotic document

Software Organizations and their Effect on Systems

As Nagappan et al write: From the historical perspective, Fred Brooks in his classic book "The Mythical Man Month" provides an analogy in the chapter on "Why did the (mythical) Tower of Babel Fail?" The observation being that, the people had (1) a clear mission; (2) manpower; (3) (raw) materials; (4) time and (5) technology. The project failed because of communication, and its consequent organization. Brooks further states that in software systems schedule disasters, functional misfits and system bugs arise from a lack of communication between different teams. Quoting Brooks “The purpose of organization is to reduce the amount of communication and coordination necessary; hence organization is a radical attack on the communication problems…”. What many organization like Amazon, SoundCloud, Otto or Google do these days is to create self-contained, cross-functional teams with a high cohesion inside the team and loose coupling between the teams.

Cohesion in programming refers to the degree to which the elements of a component belong together, all the related code should be close to each other. For teams the same is true: all people with the necessary skills to create a feature should be close together.

Coupling in programming refers to the degree to which the different components depend on each other. Preferably, components should be independent from each other as much as possible. For teams the same should be true, different teams should communicate as little as possible. Adrian Cockcroft said regarding the independence between service teams that "You don’t go and have a deep discussion with the Google Maps team just to use their Maps API: it’s a reasonably stable API, you are isolated, it’s sort of versioned, occasionally it changes and you may want to do things. So basically you build your own service, you build a bounded context around the thing that your team, your 2 or 3 engineers, are building and you build a service or a group of services that interface with all the other things that your company is doing, as if they were separate companies. It’s a different bounded context. So you talk to them but you are not tightly coupled".

How can an organization be loosely or tightly coupled? What are those properties? MacCormack et al. answered this question in a Harvard Business School publication:

Table: Characterizing Different Organizational Forms

| Tightly-Coupled | Loosely-Coupled | |

|---|---|---|

Goals |

Shared, Explicit |

Diverse, Implicit |

Membership |

Closed, Contracted |

Open, Voluntary |

Authority |

Formal, Hierarchy |

Informal, Meritocracy |

Location |

Centralized, Collocated |

Decentralized, Distributed |

Behavior |

Planned, Coordinated |

Emergent, Independent |

Of course this is not black and white, there’s always some place in between.

Conway’s Law and what to do about it

In 1968 Conway observed that "organizations produce designs which are copies of the communication structures of these organizations (If you have four groups working on a compiler, you’ll get a 4-pass compiler)". Around 2006 many companies had a frontend, backend and middleware department reflecting the three-tier architecture they were building. Modern companies have individual deployable services built by cross-functional teams.

James Coplien wrote in his book that "If the parts of an organization (e.g. teams, departments, or subdivisions) do not closely reflect the essential parts of the product, or if the relationship between organizations do not reflect the relationships between product parts, then the project will be in trouble… Therefore: Make sure the organization is compatible with the product architecture".

David Parnas (1972, 1978) argued that it is easier to split development work across a group if people can work independently and in parallel. To support parallelism, Parnas encouraged developers to avoid sharing assumptions and data. Specifically, he contended that every developer’s task assignment should lie within a product module that is “characterized by its knowledge of a design decision that it hides from all others” (1972: p. 1056)

That means that the flexibility of an organization is important to effective design and operations. It also means that you first create your architecture and then form the organizational communication around it. This is not as crazy as it sounds, because existing departments can still exist, but the people inside those departments need to be insourced into the newly formed product teams representing end-to-end the individual services or components of the system.

If you want to improve your architecture you need to improve your organization as well

TODO: collect more examples of org transformation

Since systems usually live longer than corporate structures, it is important that managers keep their organisation flexible. An example for organizational flexibility is Soundcloud. They experimented with different organizational models to find the one that fit product development best. That experimentation is possible is important to note - many organizations don’t do that. They moved over a couple of years from the classical approach of separate development and operation teams to a structure where teams can act autonomously and build and run their systems without handovers to other teams. Since there is still a need for centralized work they created a production engineering team which takes care of common infrastructure ("run the system that runs systems") and do internal consulting in case a team needs help. For some companies experimentation is really hard, e.g., a large insurance company has 800 people in an operations department and claims because of that "You build it, you run it" is not possible, the company could still keep the departments, but in fact it practices insourcing operations people (or UX specialists or business analysts, etc.) into the product teams. That way the departments, which are hard to change, still exist, but there’s still a good chance to create a cohesive team structure.

An example of a popular change of software producing organizations is the move from operations/development/business/testing silos to functional silos (e.g. a cross functional team responsible for product search), because the optimized process-based organization is horizontally focused on outcomes, not vertically oriented around skills like testing, development and operations.

TODO: provide more case studies, e.g. UK Government Digital Services, Google, Amazon, ING, Otto

Organizational Structure and its Effect on Quality

Microsoft did a large study on how organizational structure affects software quality. The effect of organizational structure on quality is higher than code churn, code complexity, code coverage or bugs found before releasing the software. Microsoft looked at a few organisational metrics:

-

Number of Engineers (NOE): The more people who touch the code the lower the quality. This is of course something you need to balance. Of course if there is only one person who works on a component, the likelihood of conceptual integrity and few bugs is high (if the persons work quality is also good). If 300 people change the component it is much harder to keep conceptual integrity. However, a company doesn’t want knowledge islands, depending on the knowledge of one single person, therefore the company should find the right balance here. Netflix recommends 2-3 people per component, Amazon has the "Two pizza team" rule (only so many people should work on a component or service who can be fed by two pizzas)

-

Number of Ex-Engineers (NOEE): A large loss of team members affects the knowledge retention and thus quality. A similar study at eBay found out that a resource pool (a pool of developers where projects can take people out) led to very bad quality

-

Edit Frequency (EF): The more source code edits to components the higher the instability and lower the quality (sure, if I never touch code which works I won’t introduce new bugs. But we know that we need to test (heavily) changed source code with a greater intensity than less changed parts of the code)

-

Depth of Master Ownership (DMO): The lower the level of ownership the better the quality. What they mean with that is that a component should have only one clear owner and that owner (can be a team) needs to report to only one leader and not many.

-

Percentage of Org contributing to development (PO): The more cohesive the contributors organizationally the higher the quality. Means that it is important to create a team where its members share a common culture, focus and social cohesion.

-

Level of Organizational Code Ownership (OCO): The more cohesive the contributors (edits) the higher is the quality. Means that it is important to create a team where its members share a common culture on how to write and design a system.

-

Overall Organization Ownership (OOW): People who change often the same source code should sit together closely or at least know each other well and have an unproblematic way to communicate. E.g. working on a particular piece of source code with a person in another timezone lowers quality, pair programming leads to higher quality.

-

Organization Intersection Factor (OIF): The more diffused the different organizations contributing code, the lower the quality. If totally unrelated teams contribute to the source code of a component and those changes are small (< 10% of all changed lines) your quality declines. Try to not have too many contributors who only contribute little.

Statistical existence and empirical evidence stemming from organizations research and social-network analysis reveals that low organisational quality connected to software can be found in the relationships across the following combinations (combined org metrics appear in brackets): TODO: still a bit unclear

-

Low quality: Code is often changed AND the number of developers changing that code is high (EF ↔ NOE)

-

Low quality: Code is often changed AND the number of developers who changed that code left the team/organization is high (EF↔ NOEE)

-

High quality: The team is cohesive and shares a common culture/focus/social cohesion AND they own their code completely and make their own decisions (OCO ↔ DMO)

-

High quality: The team shares a common culture/focus/social cohesion AND shares a common coding culture (OCO ↔ PO)

-

High quality: The team sits together (or low distance or can easily interact) AND shares a common coding culture (OOW ↔ PO)

-

High quality: The team sits together (or low distance or can easily interact) AND they share a common culture/focus/social cohesion (OOW ↔ OCO)

Value Stream Mapping

Value stream mapping is a lean-management method for analyzing the current state of events that take a product or service from its beginning through to the customer. Examples in the software world would be all the steps needed from having an idea to implementing and deploying it (which usually requires a business case, putting it on a roadmap, write requirements, create UX prototypes, implementation, test in between).

Two key metrics associated with value stream mapping are value adding times and non value adding times (=waste). If something does not directly add value as perceived by the customer, it is waste. If there is a way to do without it, it is waste. Mary Poppendieck describes seven types of waste in software development: waiting, partially done work, extra process, extra features, task switching, defects and motion.

Often, the value stream is broken in a few places where small changes can bring large improvements. Those are the places you need to find and where you can improve the architecture. A great example how value stream mapping led to a large architectural improvement is Soundcloud’s move from monolith to Microservices and from low cohesion and strong coupling of Departments to high cohesion and loose coupling of Departments (http://philcalcado.com/2015/09/08/how_we_ended_up_with_microservices.html).

How to do a / examples of value stream mapping: TODO

Old stuff - Look for:

-

violations of Conways' law

-

over or under-regulated processes

-

organization with overly strict constraints

-

organizations lacking constraints (anarchy)

-

orga-problems leading to IT problems

2.3.13. Social Debt

Social debt amounts to additional project cost connected to sub-optimal organisational and socio-technical decisions

Intent

Evaluate and track the welfare and health of a software development and operation organization or community such that additional project cost can be avoided or somehow managed.

Description

Organization, coordination and cooperation are critical forces behind software development and operation. Through well-thought protocols and cooperation guidelines, software architects, developers as much as entire organizations try to orchestrate proper coordination and cooperation but many times such attempts are burdened by sub-optimal organisational decisions, e.g., the adoption of a process model that is not compatible with developers and operators’ background and mindset or even collaborating with an organization that does not and cannot share the same values of the organization in question. These circumstances often result in additional project cost in the form of recurrent delays, condescending behaviour or worse.

Evaluating these circumstances together, trying to minimise their impact on software development and the quality of resulting software products is currently object of intensive study.

The goal for social debt in the next few years of research is that of reaching a crisp definition that contains the essential traits of social debt which can be refined into practical operationalizations for use by software engineering professionals in need of knowing more about their organizational structure and the properties/cost trade-off that structure currently reflects.

Experiences

As previously mentioned, we found three recurrent series of circumstances in which architecture decisions and the process of architecting reportedly generated social debt.

-

Lonesome Architecting: we observed this pattern manifesting when non-architects are forced to make decisions while actual architects are "too few and far apart". One of the software architects reporting this condition in industry also complained that he and his colleagues had […] not enough time to dedicate to decision-making (and related changes) as well as properly disseminating architecture decisions. Some of the most common consequences we found resulting from this pattern are: (a) decision unawareness; (b) misalignment between product version and architecture; (c) lack of awareness on the product’s needs; (d) overly fast decision-making to "patch-up". The debt in this case is associated to delays needed to find out about decisions and apply the necessary modifications, possibly rewriting code with considerable waste. Also, from a social point of view, this circumstance results in loss of project vision (i.e., frequent quotes were "what are we doing? what does the product need for its improvement?") with resulting frustration and mistrust.

-

Obfuscated Architecting: Obfuscated architecting takes place when multiple sub-groups emerge in a development network without a harmonised organisational and socio-technical vision necessary to operate in the network. We observed this pattern manifesting when new or changed architecture decisions imply implementation changes that necessitate new people to be included in the development network (e.g., different skills are needed). we observed this pattern in presence of multiple products (both legacy and new) being operated together but in the process of being integrated. New people to be included in the development network lacked the frame of mind and vision needed to understand and cope with the legacy product. This obfuscates the communication of architecture decisions. Some of the most common consequences we found resulting from this pattern are: (a) single communication points for architecture decisions - many developers eventually felt left out of the development network when it came to software architecture, since they could not reach architects properly, this led to time waste and resulting developers' frustration; (b) circumstances indicating socio-technical code-churn.

-

Architecting by Osmosis: In layman’s terms, osmosis refers to the process of permeating a solvent through a semi-permeable (series of) membrane(s). By comparison, architecting by osmosis means making architecture decisions using knowledge that is filtered through many semi-permeable communication links. we observed architecting by osmosis manifesting when the following sequence of events occurs: (1) the effects of certain decisions reach clients and product operators but result in inoperable software; (2) operators, pushed by clients, share malcontent with developers and suggest technical changes; (3) developers evaluate (and sometimes partially implement) possible technical changes and suggest change to architecture decisions; (4) architects make necessary changes in decisions with knowledge that was partially filtered by all communication layers in the development network.

References

-

Damian Tamburri, Philippe Kruchten, Patricia Lago, Hans van Vliet: What is social debt in software engineering? In: Cooperative and Human Aspects of Software Engineering (CHASE), p. 93–96, 2013, Washington, DC. https://jisajournal.springeropen.com/articles/10.1186/s13174-015-0024-6

-

Tamburri, D. A. & Nitto, E. D. (2015), When Software Architecture Leads to Social Debt., in Len Bass; Patricia Lago & Philippe Kruchten, ed., 'WICSA' , IEEE Computer Society, , pp. 61-64 .

2.3.14. Qualitative Analysis

Intent

Find out (analyze):

-

whether quality requirements can be met by the system,

-

which specific quality requirements are risks with respect to the current architecture,

-

which specific quality requirements are currently non-risks

Description

-

Capture quality requirements to ensure you have explicit, specific, valid and current quality requirements available - preferably in form of scenarios.

-

Prioritize these scenarios with respect to business value or importance for the authoritative stakeholders.

-

For every important scenario:

-

analyze the architectural approach the system takes,

-

decide whether this approach seems appropriate or risky

-

Experiences

-

Conducting workshops with a variety of stakeholders often leads to intense and productive communication.

Applicability

Use qualitative analysis to support in the following situations:

-

You need to analyze which specific quality requirements are at risk and which will most likely be met by the system.

-

You have a variety of different stakeholders or groups which can all impose quality requiements - but have not yet agreed on a common set of such requirements.

-

A current and understandable collection of specific quality requirements for the system is missing.

References

-

Section 2.3.1, “Atam”. Published by the Software Engineering Institute in numerous whitepapers and books, especially [Clements-ATAM].

2.3.16. Pre Interview Questionnaire

Intent

Prior to interviewing stakeholders, present them with a written questionnaire, so they can reflect in advance.

Description

A specialisation of questionnaire - targeted to be used by stakeholders (aka your interview partners). As with the more general questionnaire you need to collect appropriate topics, questions and suggestions. Remember to apply stakeholder-specific communication: It might be useful to create different questionnaires per stakeholder or a group of stakeholders. This can lead to more work for you as interviewer, but will also lead to better interview results.

Mix open and closed questions:

-

open questions require stakeholders to formulate answers on their own. For example "How did you…?" or "Please explain…?"

-

closed questions ask stakeholders to select from several predefined choices.

Include a "Comments/Remarks" section at the end of the questionnaire, so stakeholders can comment on topics you did not consider in advance. The Section 2.3.16, “Pre Interview Questionnaire” shall be handed over to the appropriate stakeholders in advance, a few days before the interview. As these documents will be read and processed by external and potentially critical stakeholders, you need to care for several details:

-

Stakeholder specific terminology: Ensure your questions will be understandable by the target audience. See [Stakeholder-Specific-Communication].

-

Ensure nice layout and (visual) readability. Your questionnaire shall be fun to work with.

-

Ensure timely delivery to your stakeholders, so they have enough time to think about their answers. Do never force your stakeholders to answer questions under time pressure.

2.3.17. Pre-Mortem

Description

In software projects, post-mortems are often used to learn from a failed projects with the goal to avoid the identified problems in the future. But why wait for so long?

In a pre-mortem workshop, stakeholders are imaging that they are living some months or years in the future to analyse their current project that had been failed miserably. They are explicitly encouraged to speak about the main points that did go wrong from the future’s perspective.

The organization of the workshop for a running software project can be done as follows:

-

Preparation: Participants are gathering in a room with a big empty wall. For each participant, a pen, around ten post-its and three adhesive dot markers are handed out.

-

Scenario: The workshop facilitator tells all participant that they are now in the future and their project has failed. It failed so miserably that it was a total disaster. The project members don’t speak to each other anymore and the whole company gained a bad reputation.

-

Task: To come over this heavy shock, all former project members are summoned together to spot the reasons for this fiasco. Each of the participants should write down the concrete reasons that led the complete failure (within a 5 minutes timebox, one reason on one post-it).

-

Communication: Each participant puts their post-it notes on the wall and explains each identified reason in 1-2 short sentences.

-

Grouping: The facilitator groups the post-its together to main topics and names these main topics with additional post-its that placed onto the emerged groups.

-

Prioritization: All participants can now use the dot stickers to mark the three most important topics (no accumulation allowed) from their point of view.

-

Discussion (optional): Possible solutions that address the three most important reasons are discussed and the next steps to implement the solutions are defined.

Experiences

A pre-mortem session combines the potential of negative thinking and creative thinking in a relaxed environment. Stakeholders are rewarded for the identification of issues instead of being played down or marked as naysayers. This leads to a huge amount of input from all kind of participants that would otherwise be quite regarding potential problems. The viewpoint from the future frees participants from thinking just in short terms and provides.

Applicability

Pre-mortem is a complementary method to interviews with stakeholders, that is very efficient due to the focused scenario and multi-perspective format.

Consequences

In the evaluate phase, the identified reasons can be a great starting point for further analysis.

Additionally, identified reasons from a pre-mortem session can be seen as potential risks and thus be included and tracked in the project’s risk management.

References

-

Gary Klein - Performing a Project Premortem (Online article)

2.3.18. Questionnaire

Description

Prior to taking interviews with stakeholders, formulate questions covering the topics or areas of information that:

-

might be important for you

-

your interview partners (== the stakeholders) have knowledge or experience in.

The questionnaire can be specific for a single interview or be a template for multiple interviews.

Experiences

I (Gernot Starke) used such questionnaires within several technical reviews and audits. They helped me to stay on track, cover a multitude of topics, even when I conducted several interviews consecutively.

I usually printed out one copy of the questionnaire for every interview I had planned, so I could sketch notes - and always had the context of my notes already printed, saving a lot of note-taking effort.

Applicability

Whenever you interview stakeholders, a thorough preparation will lead to better results, interviewers will be less likely to forget important topics.

2.3.20. Root Cause Analysis

To find mistakes is not enough. It is necessary to find the cause behind the mistake and build a system that minimizes future mistakes.

Intent

Explicitly differentiate between symptom and cause:

-

Identify root causes of symptoms, problems or issues

-

Trace a problem to its origins

Description

Some people fight problems, not their real cause: When faced with a problem our brains tend to start immediately to search for proper solutions for exactly this problem. For instance, if our application regularly crashes with Out-Of-Memory-Errors it might be a reflex to increase the memory settings. Instead we should ask if this is really the problem or only a symptom of the real problem, e.g. a programming failure in memory releasing. With an iterative process of asking "Why?" the causal chain must be traced down to the root cause.

Experiences

|

Users of a system complained about low performance. Developers started tuning the database, tweaking the application server, optimizing inner loops and so on (you pretty well know what I mean). That did not solve the issue. After applying Section 2.3.26, “Take What They Mean, Not What They Say” we found out that users complained about low performance in data entry, as they had to switch back-and-forth between different regions on their input-screens. The actual cause of their complaint was the improper field order in their input forms. After adapting this order by simply dragging fields/labels around the screen, users were perfectly happy with the systems. |

Related Patterns

-

Section 2.3.26, “Take What They Mean, Not What They Say”, maybe what they told you was not the real problem.

2.3.21. Runtime-Analysis

Intent

Analyze the runtime behavior of the [System], e.g. with respect to time and resource consumption or creation.

Description

-

Ask stakeholders about perceived runtime behavior - double check by measuring.

-

Measure runtime behavior, e.g. with profilers, logs or traces.

-

Inspect artifacts created at runtime (e.g. logfiles, protocolls, system-traces) for information about problems, root-causes or system behavior.

-

Perform Section 2.3.8, “Infrastructure-Analysis” to learn about the technical infrastructure.

- WARNING

-

Measuring might influence behavior. That can be especially disturbing in multi-threaded, multi-user or multi-core applications.

2.3.22. Software Archeology

Description

-

Make sure you have the complete code, scripts, frameworks and tools required to build the system.

-

Ensure you have access rights to all required systems, at least to version control, database, participating servers etc.

-

Ensure you can build the system from scratch (compile, load all dependencies, link or whatever steps are necessary).

-

Practice View-Based Understanding by sketching notes or diagrams. Get an aerial view, a glimpse of the topmost, biggest structures in the code.

-

Try to understand the terminology of the original authors by scanning code and searching for multiple occurrences. Start creating a glossary.

-

Introduce tracing or logging statements. Aspect-oriented tools (like AspectJ) might be helpful.

Experience

-

By examining the build scripts of the software project or the job definitions on a continuous integration server, you can build the software and the needed testing environment on your own.

-

With simple tools like the

git shortlog -nscommand you can easily check parts of a software system for potential loss of knowledge based on the commits per developer. -

By using word clouds, you can quickly extract the most occurring terms in your source code and start creating a glossary for the most prominent words.

Applicability

You have to understand a system with:

-

little or no current documentation.

-

no knowledgeable technical stakeholders or development team available

References

-

[Hunt-Archeology], pleasant introduction without adherence to any strict pattern template. Recommended reading.

-

[Moyer-Archeology], short report.

-

[OORP], page 53ff. , "Read all the Code in One Hour" suggest to read all source code in a short period of time to get a rough feeling about the software system.

-

[OORP], page 97ff. , "Speculate about Design" suggest to create diagrams based on hypothesis and progressive refinement.

-

[Tornhill-XRay] shows many ways of using version control systems to recreate knowledge about software systems.

2.3.23. Stakeholder Analysis

Find out which people, roles, organizational units or organizations have interests in the [System].

Description

Get an initial list of stakeholders from project management.

Distinguish between roles and individuals. Some stakeholders need to be adressed individually, for roles it might be sufficient to identify any of several possible representatives.

Take the following list as examples of roles:

top-management, business-management, project-management, product-management, process-management, client, subject-matter-expert, business-experts, business-development, enterprise-architect, IT-strategy, lead-architect, developer, tester, qa-representative, configuration-manager, release-manager, maintenance-team, external service provider, hardware-designer, rollout-manager, infrastructure-planner, infrastructure-provider, IT-administrator, DB-administrator, system-administrator, security- or safety-representative, end-user, hotline, service-technician, scrum-master, product-owner, business-controller, marketing, related-projects, public or government agency, authorities, standard-bodies, external service- or interface providers, industry- or business associations, trade-groups, competitors

Include those stakeholders in a simple table:

| Role/Name | Description | Intention | Contribution | Contact |

|---|---|---|---|---|

name of person or role |

responsibility for System |

intention for/with/against System |

what can/will/need they contribute to improvement of System, optionally or required |

how to contact. For roles, name a primary contact person. |

Experience

There are often more stakeholder roles involved than it is obvious. Especially those people not directly involved in project- or development work sometimes are forgotten, e.g. standard bodies, external organizations, competitors, press or media, legal department, employee organization.

References

-

Section Stakeholders of arc42-template, Introduction and Goals

2.3.24. Stakeholder Interview

Conduct personal interviews with key persons of the [System] or associated processes to identify, clarify or discuss potential issues and remedies.

Intent

Learn from the people who know or care about the [System] and everything around it.

Description

Conduct a Section 2.3.23, “Stakeholder Analysis” first to find out whom to interview.

Apply a breadth-first strategy, speak with people from different departments, roles, management-levels. Include at least business-people, IT- and business manager, end-user, developer, tester, customer-service, subject-matter-expert.

Plan the interview dates at least 5-10 days in advance, choose a quiet location, make sure nobody can overhear your interviews.

If possible, send out a stakeholder- or role-specific Section 2.3.16, “Pre Interview Questionnaire” some days in advance.

Ensure a no-stress and no-fear situation. Never have top-managers or supervisors be present during interviews of their subordinates. Explain your positive intent and your role in the improvement project. Have water and cookies at hand. Make your interview partners feel comfortable and relaxed. Be honest and humble. Never ever promise something you cannot guarantee!

Ask open questions.

Tape or protocoll questions and answers.

Some typical questions, e.g.:

-

What is your role in this project?

-

What is great about the [System], the business and the processes?

-

What worries you about the [System]? What are currently the 3 worst problems?

-

What problems or risks do you see in (business/development/operation/usage…)?

-

Can you show/demonstrate this problem?

-

How can I reproduce this problem myself?

-

When/where does it occur?

-

What are the consequences of this problem? Who cares about this problem?

-

How can we/you/somebody overcome this problem?

-

-

How are the processes working? What are the differences between theory and practice?

-

If you had time, money and qualified people, what top-3 measures do you propose?

-

Is there anyone you think we need to speak with who isn’t on our list?

-

How would you like to be involved in the rest of this project, and what’s the best way to reach you?

In case people told you about severe problems, try to experience/see those problems yourself. At the end of the interview, give a short feedback and summarize important results to ensure you understood your interview partner correctly.

Experience

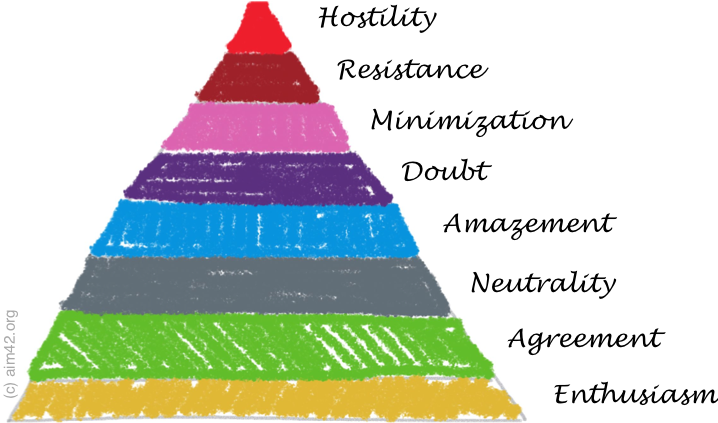

Expect the usual difficulties in human communication: people will love or dislike your work, the interview or the intent of your endeavour.

-

Some people will hold back information, either accidently or deliberately.

-

You have to create the big picture yourself. Most people tend to focus on their specific issues.

-

Double-check critical statements, as some people might exaggerate.

Related Patterns

-

Section 2.3.23, “Stakeholder Analysis”, to identify important and authorative stakeholders.

-

Section 2.3.18, “Questionnaire”, especially Section 2.3.16, “Pre Interview Questionnaire”.

References

-

http://boxesandarrows.com/a-stakeholder-interview-checklist, nice checklists for several kinds of stakeholder interviews

2.3.25. Static Code Analysis

Intent

Static Analysis can serve two purposes:

-

Analyse source code to identify building blocks and their dependencies, determine complexity, coupling, cohesion and other structural properties.

-

Detect certain types of bugs, dangerous coding patterns and bad coding style.

Description

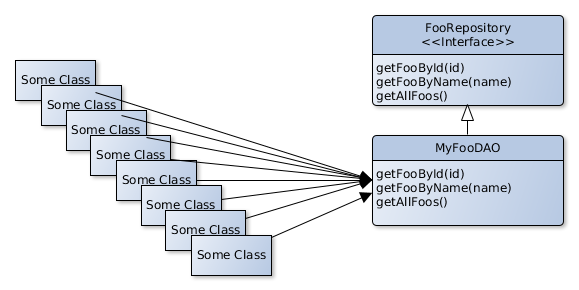

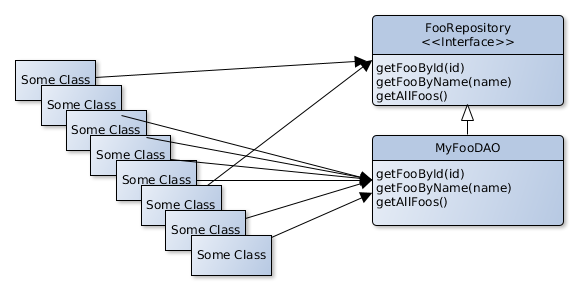

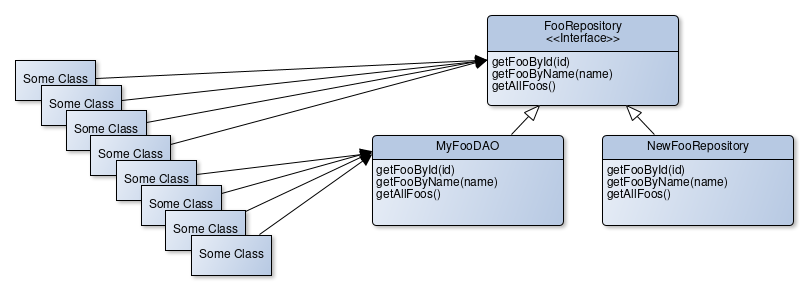

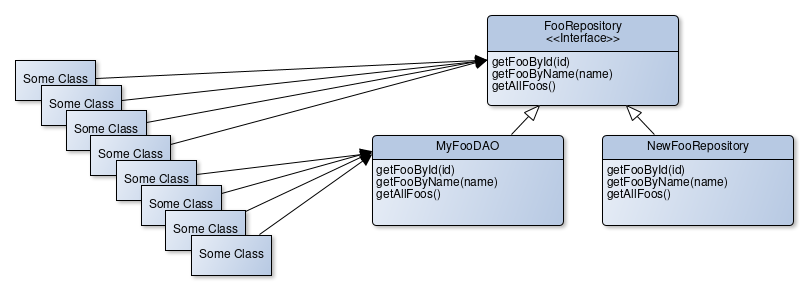

Use source code analysis tools to analyse static properties of the system’s source code, e.g. the following:

- Coupling and dependencies

-

Where do the building-blocks (e.g. classes, packages, modules, subsystems) of your system depend upon? What are the intra-system call- and communication relationships?

Experiences

-

Many projects (commercial and open-source) apply automated static code analysis as part of their build processes.

Applicability

Apply static code analysis when the code base is medium sized or large and the appropriate tools are available.

-

Many metrics and tools are tailored to object-oriented programming languages.

-

Dynamically typed languages often have limited tool support.

References

-

SonarQube, LGPL-licenced open-source platform to analyze code.

-

JDepend, open-source Java dependency checker.

-

Sonargraph, static code analyzer focused on software structure and architecture.

2.3.26. Take What They Mean, Not What They Say

Description

Natural language has the risk that semantics on the sender’s side differs from semantics of the receiver: People simply misunderstand each other because the meaning of words differs between people.

Therefore: what people mean/want/need is sometimes not what they say. This is due either to

-

semantic differences on sender and receive sides,

-

stressful or inconvenient communication situations (e.g. "the boss is present", communication under pressure),

-

people are distracted or tired,

or other reasons.

NLP (neurolingustic programming) practitioners recommend to mirror things you hear to your communication partners in your own words. This might facilitate understanding.

When you have the slightest impression or indicator that your communication partner does not or can not communicate their real intention, you should clarify by giving explicit Section 5.11, “Fast Feedback”.

Applicability

Apply this pattern whenever you communicate verbally to other people (aka stakeholders) e.g. in meetings, conferences, phone calls etc.

Especially when verbal communication differs from paralanguage or gestures, you should clarify what your communication partner really meant.

Consequences

-

Improved understanding between stakeholders.

-

Section 5.9, “Explicit Assumption”, instead of implicit ones.

Related Patterns

-

Section 5.11, “Fast Feedback”, as you give immediate feedback within ongoing communication.

-

In every Section 2.3.24, “Stakeholder Interview” you should apply this pattern.

-

Section 2.3.23, “Stakeholder Analysis” to find out, who are the important stakeholders you should apply this pattern to.

2.3.27. User-Analysis

Intent

Get an overview of user categories or groups, their goals, requirements and expectations. Find out about issues users have with the system. Related to Section 2.3.23, “Stakeholder Analysis”, Section 2.3.3, “Context-Analysis” and Section 2.3.19, “Requirements-Analysis”.

In contrast to the other analysis practices, user analysis can also include useability, layout or design considerations.

2.3.28. View Based Understanding

Intent

Understand the inner workings and internal (code) structure of of the systems. Document (and communicate) this via architectural views, especially the building-block view.

Description

-

Apply [arc42] views

-

Interview technical stakeholders

-

Start either from the

-

business context, mainly the external business interfaces

-

technical context, the involved hardware and network structure

-

known technology areas, i.e. products, programming languages or frameworks used

-

Applicability

Use view-based-understanding when:

-

System has a medium to large codebase

-

Structural understanding of the code is limited: only few stakeholders can explain and reason about the code structure

-

Documentation of the code structure is not existing, outdated or wrong

-

Long-term maintenance and evolution of the system is required

Consequences

-

Explicit overview of the system context with the external interfaces.

-

Overview of the larger units of source-code (subsystems, high-level components) and their relationships.

References

-

4+1 architectural view model by Philippe Kruchten

2.3.29. Bus factor

The bus factor is a measurement of the risk resulting from information and capabilities not being shared among team members, from the phrase "in case they get hit by a bus" (Wikipedia).

Intent

Improve the structure of a system or its documentation so that the organisation is not at risk if certain key people leave.

Description

In an ideal world, the whole team owns the all code and anybody can safely change anything. Often this is not the case, because

-

the (monolithic) system has already grown so large and complex that knowing all the details and concepts are too overwhelming for a single person

-

requirements of certain parts of the domain are always given to the same developer (by the management), because this developer knows everything about the domain and can therefore implement these requirements very fast. Any newbie would need a lot of time to achieve small things and the organisation takes on this organisational debt (they gain an advantage short term, but it could be very problematic long term), because they don’t want to invest in distributing knowledge

-

developers want to increase their employability. If only they know about a critical part of the system, they cannot be fired or put under pressure easily. Therefore they want to protect those parts and try hard to not let others work on the code. Sometimes this even leads to deliberate complexity - complexity that has been introduced to obfuscate better understanding by other developers.

Usually, a team has already a good grasp on which parts of the system certain developers own the knowledge almost completely. It is vital to get some numbers through mining repository data, e.g. if you think that dev A is the only one knowing about some security code, then you can analyze the commit history of the security package. Adam Tornhill gives a lot of ideas and also code snippets on how to do that in his book "Your Code As a Crime Scene". What he does is looking at the changed lines of a package over all the commits of a certain time frame. If a large amount (e.g. >80%) of those commits is coming from a single developers, you should act.

3. Evaluate

3.1. Goals

Make the issues, problems and risks found during the analysis comparable by estimating or measuring their value (that’s why we call this activity evaluate):

-